Aerial drones deliver sweet spot for HAB research at VIMS

‘Eyes in the sky’ help guide water sampling from research vessels

Harmful algal blooms, or HABs, are notoriously difficult to sample. They can appear abruptly when growing conditions are right, and disappear just as quickly when conditions deteriorate. They also shift with tides and currents, or even the wake of a passing vessel.

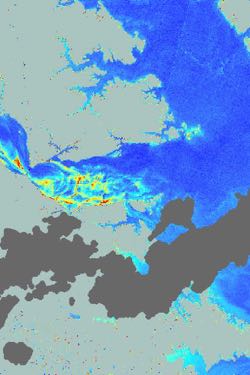

Now, researchers at the Virginia Institute of Marine Science are using aerial drones as ‘eyes in the sky’ to guide their seafaring colleagues to the densest bloom patches, allowing those onboard VIMS vessels to collect water samples with much greater efficiency and lower cost. VIMS scientists analyze those samples to identify whether they contain toxic algal species that might threaten marine life or human health.

Dr. Donglai Gong, an assistant professor at VIMS, says the drones provide a “sweet spot” in studying HABs, flying high enough to give a bird’s-eye view, but low enough to discern even small-scale streaks and patches in exquisite detail. They complement the other lofty tools that VIMS researchers use to visualize blooms—photos from single-engine aircraft and satellite imagery from NASA.

“The plane and satellite give you much broader spatial coverage,” says VIMS professor Kim Reece, who has been studying HABs for more than a decade. “We can fly over the entire lower Chesapeake Bay. But it’s at a higher altitude, so you don’t get the resolution that you do with the drone.”

“Another factor is cost,” says Gong. “You can get a very nice drone for a few thousand dollars,” whereas it typically costs hundreds of dollars per hour to operate a single-engine plane. Also, his team can deploy their drone in a matter of minutes, providing the opportunity to investigate a York River bloom almost immediately after it’s been observed from the VIMS campus or a vessel.

Gong and his team use two drones for visualizing HABs. The newest, a DJI Phantom 4 Pro Quadcopter, can fly at 45 mph and stay aloft for 30 minutes before its lithium-ion batteries need recharging. Its camera is equipped with a 20-megapixel sensor that can shoot both high-resolution still images and video. Gong and fellow drone pilot Lydia Bienlien, a Ph.D. student in William & Mary’s School of Marine Science at VIMS, download and share the images in real time via a cellular connection.

Serendipitous discovery

Gong, a physical oceanographer, didn’t set out to visualize algal blooms when he launched the drones earlier this summer. He obtained them in 2014 for studying shorelines and marshes.

“It happened by chance,” says Gong. “I was flying the drone for practice when I noticed there were dark streaks in the water. Being a physicist, I had no idea what biological processes could be causing them. So I took pictures and posted them to a VIMS mailing list, and that’s how we got connected.”

That connection is with Reece, fellow VIMS professor Iris Anderson, and the many other VIMS researchers studying HABs. They have quickly adopted the drone’s real-time visuals to guide their sampling in the York River. “We’d been a little bit blind until we had these new tools,” says Reece.

Anderson is the lead investigator on a recent grant from the National Science Foundation awarded to help better understand and model how algal blooms affect the cycling of carbon through estuaries like the York River. The drone imagery is helping her team better use their own high-tech research instrument.

“Our new grant uses a Dataflow system,” says Anderson. “It attaches to the boat so you can drive at 20 knots down the estuary while pumping water through a series of sensors. In real time you can see, for example, where there’s high chlorophyll suggesting a bloom. At the same time it’s measuring a lot of other variables such as dissolved oxygen, carbon dioxide, and nutrients.”

Measurements from Dataflow also help delineate bloom boundaries. VIMS professor Mark Brush, who brings expertise in estuarine productivity and modeling to the Dataflow sampling and analysis, says, “Determining the spatial extent of the blooms is critical to our efforts to model the effect of the blooms on the York River ecosystem.”

But keeping the research vessel within the ever-shifting boundaries of a bloom is a challenge. VIMS professor BK Song, who brings expertise in microbiomes and nutrient cycling, says, “When you’re out on the water, you can’t see if you are in the middle of the bloom or on its edge.”

“That’s why it’s difficult to get samples in the right place,” adds Reece. “The bloom is very dispersed. You can sample 40,000 cells [per milliliter] in one patch and 40 cells outside it; there are really big differences in concentration.”

“The chemistry of the water completely changes as well,” says Anderson.

“But with the drone, we can now actually see where those patches are,” says Reece. Gong and Bienlien launch the drone from a high spot on VIMS’ Gloucester Point campus, fly it out over the York River, and call in what they see to Anderson, Song, Brush, and other VIMS researchers to guide their Dataflow path.

Envisioning a broader spectrum

Not content to rest on the drone’s photographic laurels, the VIMS researchers envision an even broader spectrum of use in coming years.

“Like a regular camera, the drone’s optical sensors can measure water color in red, green, and blue,” says Gong. “But ocean-color sensors can measure multiple bands, into the infrared, for example. Having those additional spectral bands can potentially tell you what kind of organism is in the water.”

“Different algae have different pigments,” explains Anderson, “and each one absorbs a certain wavelength of light.” Indeed, previous studies by Reece’s team show that Cochlodinium polykrikoides and Alexandrium monilatum, the two species that dominate the local summer bloom, have slightly different spectral profiles.

“You can see a difference in color when you’re on the water,” says Reece. “Alexandrium is a little more brown, Cochlodinium a bit more red. Their blooms are a little different and you can tell.”

Reece and Anderson have already begun collaborating with scientists at NASA and NOAA to develop algorithms that would use these spectral differences to distinguish between the two algal species in satellite imagery. Now they want to apply that approach to the drone.

“We’ve realized how helpful the drone is, so now we’re looking to improve our capabilities by adding the kind of multispectral sensor NASA has on their satellites for ocean-color measurements,” says Anderson.

“It would be really nice to look at a drone image and be able to say ‘This bloom is Cochlodinium and this one’s Alexandrium,’” says Reece. “If NASA and NOAA can develop an algorithm to distinguish between the species’ spectral profiles, it would be nice to add a multispectral sensor to the drone and use that in addition to the satellite data.”

“That would take us to the next level,” says Gong. “If we had a quadcopter with the right sensors and algorithms, we could tell what kind of organisms are out in the Bay.”